Table of Contents

- The Silent Killer in Your AI Stack

- What the Hell is TOON Anyway?

- Why This Actually Matters

- The Four Fighters: TOON vs JSON vs CSV vs YAML

- Format Showdown: When to Use What

- Real Numbers: The TOON Savings Calculator

- Use Case Battle Royale

- The Visual Proof

- How to Actually Implement TOON

- The Honest Trade-Offs

- Quick Reference Cheat Sheet

- Your Next Steps

The Silent Killer in Your AI Stack

You know that feeling when your LLM API bill arrives and you think, “Wait, I’m paying HOW much just to send data back and forth?”

Yeah. Me too.

Here’s the thing nobody tells you when you’re building AI products: the way you format your data matters more than you think. Like, way more. We’re not talking about a 5% optimization here—we’re talking about cutting your costs in half while making your AI more reliable.

Picture this: You’re building a RAG system. You retrieve 50 documents from your vector database. Each document has metadata—title, author, date, score, tags. You format it as JSON because, well, that’s what everyone does.

{

"results": [

{"id": "doc1", "title": "Getting Started", "score": 0.94, "author": "Sarah"},

{"id": "doc2", "title": "Advanced Tips", "score": 0.91, "author": "Mike"},

{"id": "doc3", "title": "Best Practices", "score": 0.89, "author": "Lisa"}

// ... 47 more documents

]

}

Looks fine, right?

Wrong.

You just sent the words "id", "title", "score", and "author" fifty times each to your LLM. That’s 200 repetitions of the same structural information. You’re not paying for data—you’re paying for JSON’s verbose syntax.

This is the silent tax every AI team pays. And most don’t even know it’s happening.

What the Hell is TOON Anyway?

TOON (Token-Oriented Object Notation) is what happens when someone finally asks: “What if we designed a data format specifically for LLMs instead of just using what we’ve always used?”

Here’s that same data in TOON:

results[3]{id,title,score,author}:

doc1,Getting Started,0.94,Sarah

doc2,Advanced Tips,0.91,Mike

doc3,Best Practices,0.89,Lisa

See what just happened? We declared the structure once at the top, then just listed the values. No curly braces repeated 50 times. No field names repeated 200 times.

Same data. 66% fewer characters. 30-60% fewer tokens.

Your API bill just got slashed. Your context window just got bigger. Your AI can now handle more information per request.

TOON in Plain English

Think of TOON as a hybrid:

- The structure clarity of JSON (you know what fields exist)

- The compactness of CSV (no repeated field names)

- The readability of YAML (humans can actually parse it)

- Built-in schema awareness (the

[3]tells your LLM “expect exactly 3 items”)

And here’s the kicker: it’s losslessly convertible to and from JSON. You don’t have to rewrite your entire stack. You convert at the edge, right before sending to your LLM.

Why This Actually Matters

Let me be brutally honest: I’m skeptical of “revolutionary” new formats. We don’t need another standard competing in the XKCD comic about standards.

But TOON isn’t trying to replace JSON everywhere. It’s solving a specific, expensive problem: LLMs charge by the token, and traditional formats waste tokens on structural overhead.

The Three Big Wins

1. Unit Economics

- GPT-4 costs ~$0.03 per 1K input tokens

- Cutting token usage by 40% = cutting costs by 40%

- At scale, that’s real money: $10K/month → $6K/month

2. Context Window Freedom

- Claude has 200K tokens of context

- JSON fills that up fast with structural noise

- TOON lets you fit 1.5-2x more actual data in the same window

3. Reliability Boost

- Explicit array lengths (

[50]) reduce hallucinations - LLMs know exactly how many items to expect

- Schema declarations improve parsing accuracy

This isn’t theory. Teams are reporting these results in production.

The Four Fighters: TOON vs JSON vs CSV vs YAML

Let’s break down all four formats with the kind of comparison table you’ll actually use.

| Format | Token Efficiency | Structure Support | LLM Training | Human Readable | Best For |

|---|---|---|---|---|---|

| TOON | ⭐⭐⭐⭐⭐ (Highest) | ⭐⭐⭐⭐ (Rich) | ⭐⭐⭐ (Growing) | ⭐⭐⭐⭐ (Good) | LLM prompts, RAG, agent memory |

| JSON | ⭐⭐ (Baseline) | ⭐⭐⭐⭐⭐ (Best) | ⭐⭐⭐⭐⭐ (Universal) | ⭐⭐⭐ (OK) | APIs, mixed data, deep nesting |

| CSV | ⭐⭐⭐⭐⭐ (Highest) | ⭐ (Flat only) | ⭐⭐⭐⭐ (High) | ⭐⭐⭐⭐⭐ (Simple) | Pure tables, logs, metrics |

| YAML | ⭐⭐⭐ (Variable) | ⭐⭐⭐⭐ (Flexible) | ⭐⭐⭐ (Medium) | ⭐⭐⭐⭐⭐ (Best) | Config files, human editing |

The Syntax Side-by-Side

Let’s look at the same user dataset in all four formats:

TOON:

users[2]{id,name,role,active}:

1,Alice,admin,true

2,Bob,user,false

JSON:

{

"users": [

{"id": 1, "name": "Alice", "role": "admin", "active": true},

{"id": 2, "name": "Bob", "role": "user", "active": false}

]

}

CSV:

id,name,role,active

1,Alice,admin,true

2,Bob,user,false

YAML:

users:

- id: 1

name: Alice

role: admin

active: true

- id: 2

name: Bob

role: user

active: false

Character count:

- TOON: 64 characters

- JSON: 156 characters (2.4x more)

- CSV: 46 characters (but no structure metadata)

- YAML: 118 characters

Format Showdown: When to Use What

Here’s the no-BS decision matrix:

Use TOON When:

✅ You’re sending structured data to LLMs

✅ You have arrays of similar objects (uniform schema)

✅ Token costs are a concern

✅ You need explicit schema validation

✅ Your data is tabular or semi-tabular

Perfect for: RAG retrieval results, agent memory logs, batch user data, event streams, metrics with metadata

Use JSON When:

✅ You need maximum compatibility

✅ Your data is deeply nested and irregular

✅ You’re building APIs for other systems

✅ Token cost isn’t your primary concern

✅ The LLM’s JSON familiarity matters

Perfect for: REST APIs, config with mixed types, one-off prompts, debugging/development

Use CSV When:

✅ Your data is purely flat/tabular

✅ You don’t need field type information

✅ Maximum compression is critical

✅ You’re fine with no nesting

Perfect for: Simple logs, metrics dashboards, spreadsheet exports, time-series data

Use YAML When:

✅ Humans need to read and edit the file

✅ You’re writing config files

✅ Structure and comments matter

✅ It won’t go directly to an LLM

Perfect for: CI/CD configs, infrastructure-as-code, application settings, documentation

Real Numbers: The TOON Savings Calculator

Let’s get specific with a real-world scenario.

Scenario: E-commerce RAG System

You’re building product search with RAG. Each query retrieves 50 products with this schema:

- SKU (string)

- Name (string)

- Price (float)

- Category (string)

- In Stock (boolean)

- Reviews Count (integer)

JSON payload:

{

"products": [

{"sku": "A101", "name": "Wireless Mouse", "price": 29.99, "category": "Electronics", "in_stock": true, "reviews": 142},

// ... 49 more products

]

}

TOON payload:

products[50]{sku,name,price,category,in_stock,reviews}:

A101,Wireless Mouse,29.99,Electronics,true,142

...

Token comparison (using GPT-4 tokenizer):

- JSON: ~1,400 tokens

- TOON: ~600 tokens

- Savings: 57%

Monthly cost impact (1M requests):

- JSON: $42,000/month

- TOON: $18,000/month

- You just saved: $24,000/month

That’s a junior engineer’s salary. Or a serious beer budget.

Use Case Battle Royale

RAG (Retrieval-Augmented Generation)

The Challenge: You’re pulling chunks from a vector database with metadata. The metadata bloats your prompt.

Winner: TOON

Why: Retrieval results are uniform (same schema for every chunk). TOON’s tabular format cuts tokens by 40-60% while maintaining all metadata.

chunks[100]{id,score,title,author,date,content}:

c1,0.94,Getting Started,Sarah,2025-01-15,Content here...

c2,0.91,Advanced Guide,Mike,2025-02-03,More content...

...

Runner-up: JSON (if you need complex nested metadata)

Avoid: CSV (loses type information and is hard to parse back)

AI Agent Memory & State

The Challenge: Your agent needs to remember conversation history, retrieved facts, and current state. This accumulates fast.

Winner: TOON

Why: Agent state often includes logs, events, and structured records. TOON keeps memory compact while explicit lengths help the agent validate its own state.

conversation[5]{turn,role,topic,timestamp}:

1,user,product_search,2025-11-17T10:30:00

2,agent,search_executed,2025-11-17T10:30:02

3,user,refine_query,2025-11-17T10:30:15

...

Runner-up: JSON (for complex state machines)

Prompt Engineering with Examples

The Challenge: Few-shot learning requires multiple examples. JSON examples eat your context window.

Winner: TOON

Why: You can fit 2x more examples in the same token budget, improving few-shot accuracy.

Runner-up: CSV (if examples are truly flat)

Configuration & Policies

The Challenge: Your LLM needs to reference pricing tables, policy matrices, or rule sets.

Winner: Tie between TOON and YAML

- TOON if the config is tabular and will go directly to the LLM

- YAML if humans need to edit it and it’s nested/complex

Avoid: JSON (too verbose for large policy tables)

Metrics & Analytics

The Challenge: You want your LLM to analyze usage metrics, error logs, or performance data.

Winner: TOON (structured metrics) or CSV (pure time-series)

Why: Both are compact. TOON adds schema awareness that helps LLMs interpret the data correctly.

metrics[24]{hour,requests,errors,latency_p95}:

0,1423,3,245

1,1556,1,198

2,1234,0,156

...

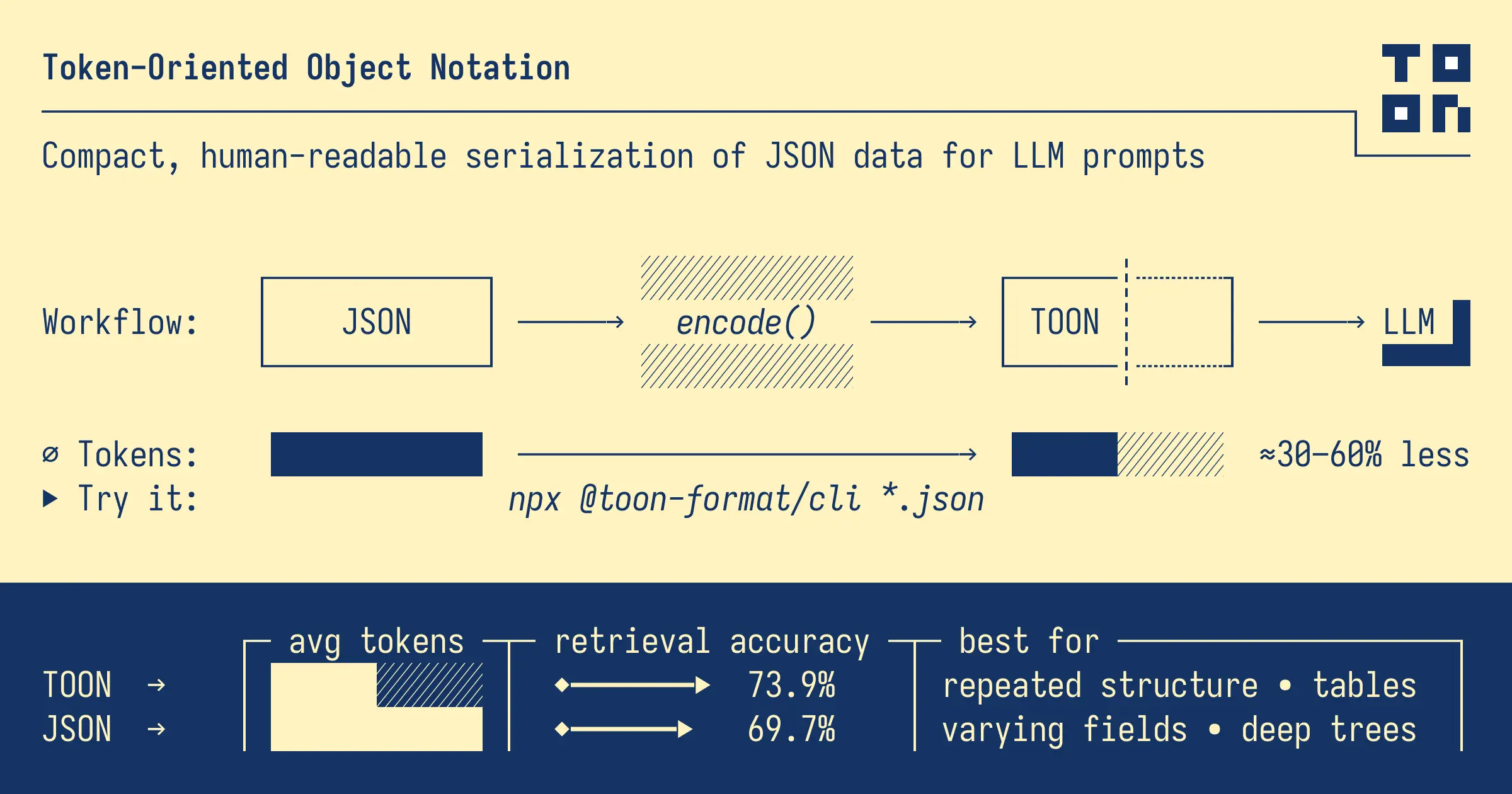

The Visual Proof

Remember those images? Let’s break down what they’re showing.

Image 1: Product Inventory Comparison

JSON version:

- Full of repeated keys:

"sku","name","price"etc. - Lots of structural syntax:

{,},[,]," - 378 characters for a simple product list

TOON version:

- Schema declared once at top

- Pure data in rows

- 128 characters for the same information

- 66% size reduction

This isn’t a cherry-picked example. This is typical for any uniform dataset.

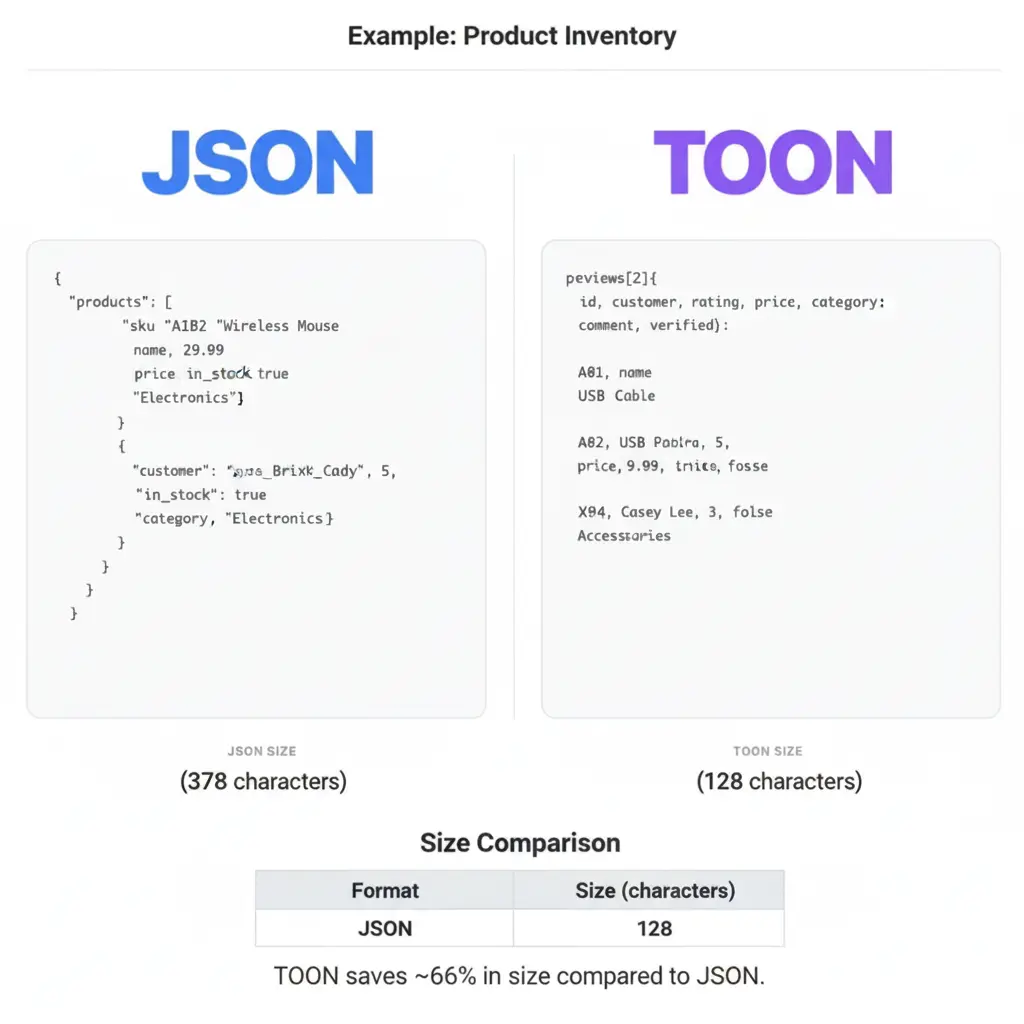

Image 2: The Workflow & Benchmarks

The key insights:

- Workflow: JSON → encode() → TOON → LLM (and back if needed)

- Token savings: 30-60% less on average

- Retrieval accuracy: 73.9% (TOON) vs 69.7% (JSON) in benchmarks

- Best use case: Repeated structures and tables

That retrieval accuracy improvement is subtle but real. Explicit schema helps LLMs parse data more reliably.

How to Actually Implement TOON

Enough theory. Here’s how you do this in practice.

Step 1: Install the Library

Python:

pip install toon-llm

JavaScript/TypeScript:

npm install @toon-format/core

Other languages:

- Java: JToon

- Rust: toon-rs

- Go: go-toon

Step 2: Convert Your Data

Python Example:

from toon import encode_toon, decode_toon

import json

# Your existing JSON data

data = {

"users": [

{"id": 1, "name": "Alice", "role": "admin"},

{"id": 2, "name": "Bob", "role": "user"}

]

}

# Convert to TOON for LLM prompt

toon_string = encode_toon(data)

print(toon_string)

# Output: users[2]{id,name,role}:\n1,Alice,admin\n2,Bob,user

# Send to LLM

prompt = f"Analyze these users:\n{toon_string}"

response = llm_call(prompt)

# Convert back if needed

original_data = decode_toon(toon_string)

JavaScript Example:

import { encode, decode } from '@toon-format/core';

const data = {

products: [

{ sku: 'A101', name: 'Mouse', price: 29.99 },

{ sku: 'A102', name: 'Keyboard', price: 79.99 }

]

};

// Convert to TOON

const toonString = encode(data);

console.log(toonString);

// Use in prompt

const prompt = `Analyze these products:\n${toonString}`;

const response = await callLLM(prompt);

// Parse response back if LLM returns TOON

const parsed = decode(response);

Step 3: Benchmark Your Use Case

Don’t just take my word for it. Measure your actual savings:

import tiktoken # OpenAI's tokenizer

# Your data

json_string = json.dumps(data)

toon_string = encode_toon(data)

# Count tokens

encoder = tiktoken.encoding_for_model("gpt-4")

json_tokens = len(encoder.encode(json_string))

toon_tokens = len(encoder.encode(toon_string))

print(f"JSON: {json_tokens} tokens")

print(f"TOON: {toon_tokens} tokens")

print(f"Savings: {100 * (1 - toon_tokens/json_tokens):.1f}%")

Step 4: Integrate Gradually

Smart rollout strategy:

- Start with RAG retrieval results (usually the biggest win)

- Move to agent memory/state next

- Convert batch prompts with tabular data

- Leave irregular data in JSON (don’t force it)

You don’t need to convert everything. Focus on the 20% of use cases that give you 80% of the savings.

Step 5: Monitor & Iterate

Track these metrics:

- Token count per request (before/after)

- API cost per day/week

- Response accuracy (does TOON change quality?)

- Parsing errors (TOON should reduce these)

The Honest Trade-Offs

I’m not going to sell you a perfect solution because it doesn’t exist. Here are TOON’s real limitations:

1. Learning Curve

Your team needs to learn new syntax. It’s not hard (seriously, it’s simpler than YAML), but it’s different. Budget time for onboarding.

Mitigation: Start with one use case, create internal docs, and demonstrate the cost savings to get buy-in.

2. LLM Training Bias

Models have seen way more JSON in training than TOON. For deeply nested or irregular data, JSON’s familiarity can give slightly better accuracy.

Mitigation: Use TOON for uniform/tabular data where it shines. Keep JSON for complex nested structures.

3. Tooling Maturity

TOON is newer than JSON. While solid libraries exist, the ecosystem is smaller.

Mitigation: Stick to official libraries (toon-llm, @toon-format/core) which are production-ready.

4. Not a Silver Bullet

If your data is highly irregular (every object has different fields), TOON’s advantages shrink. It’s optimized for patterns and repetition.

Mitigation: Profile your data first. If schema varies wildly, JSON might be better.

5. Edge Cases

Some LLM output parsing gets trickier if the model returns TOON. You need to handle both formats.

Mitigation: Be explicit in prompts: “Return your analysis as JSON” or “Return results in TOON format.”

Quick Reference Cheat Sheet

TOON Syntax Primer

Basic array:

items[3]{id,name}:

1,Apple

2,Banana

3,Cherry

Nested structure (key folding):

data.users[2]{id,email}:

101,alice@example.com

102,bob@example.com

Different delimiters:

# Tab-delimited (might save more tokens)

records[2]{id name score}:

1 Alice 95

2 Bob 87

# Pipe-delimited

records[2]{id|name|score}:

1|Alice|95

2|Bob|87

Mixed types:

products[2]{sku,price,in_stock,tags}:

A101,29.99,true,"electronics,wireless"

A102,49.99,false,"electronics,gaming"

Conversion Cheatsheet

| Task | Python | JavaScript |

|---|---|---|

| Install | pip install toon-llm | npm install @toon-format/core |

| Encode | encode_toon(data) | encode(data) |

| Decode | decode_toon(string) | decode(string) |

| Custom delimiter | encode_toon(data, delimiter='\t') | encode(data, { delimiter: '\t' }) |

When to Use What (Quick Decision Tree)

Is this data going to an LLM?

├─ No → Use JSON (it's fine)

└─ Yes → Is it tabular/uniform?

├─ Yes → Use TOON (big savings)

└─ No → Is it deeply nested?

├─ Yes → Use JSON (LLM familiarity helps)

└─ No → Is token cost critical?

├─ Yes → Try TOON, benchmark it

└─ No → Use JSON (easier for now)

Your Next Steps

Alright, you’ve made it this far. Here’s what you actually need to do:

If You’re Convinced (Start Today):

1. Pick One Use Case

- RAG retrieval results (easiest win)

- Agent memory logs

- Batch processing prompts

2. Install & Test

pip install toon-llm

3. Convert 10 Examples

- Take real production data

- Convert to TOON

- Count tokens (use tiktoken)

- Calculate savings

4. Run a 1-Week Experiment

- Deploy TOON for that one use case

- Monitor: costs, accuracy, errors

- Compare against JSON baseline

5. Scale What Works

- Roll out to similar use cases

- Train team on syntax

- Build internal tools/templates

If You’re Still Skeptical (Smart Move):

1. Try the Playground

- Visit the TOON web playground

- Paste your actual production JSON

- See character/token comparison

- No installation required

2. Read the Benchmarks

- Check the official TOON docs

- Look for use cases similar to yours

- Read community reports on GitHub

3. Ask “What’s My Biggest Pain?”

- Running out of context window? → TOON helps

- API costs killing you? → TOON helps

- Irregular data, small prompts? → JSON is fine

Resources to Bookmark

Official:

- TOON Spec: [github.com/toon-format/spec]

- Python Library: [pypi.org/project/toon-llm]

- JS Library: [npmjs.com/package/@toon-format/core]

Community:

- TOON Discord: Where practitioners share real-world results

- GitHub Discussions: Ask questions, see integrations

Tools:

- Web Playground: Test conversions instantly

- VS Code Extension: Syntax highlighting for .toon files

The Bottom Line

Here’s what I want you to remember:

TOON isn’t about being cool or trendy. It’s about solving a real, expensive problem: LLMs charge by the token, and traditional formats waste 30-60% of those tokens on repetitive structure.

For the right use cases—uniform data, tabular structures, RAG results, agent memory—TOON is a no-brainer. You get:

- Lower costs (immediately)

- Bigger context budgets (fit more data)

- Better reliability (explicit schemas reduce errors)

For other use cases—highly irregular data, deeply nested structures, one-off prompts—stick with JSON. It’s mature, universal, and sometimes that’s exactly what you need.

The smart play? Don’t rewrite your whole stack. Pick one high-volume use case, convert it to TOON, measure the savings, and expand from there.

Because at the end of the day, DevOps for PMs isn’t about using the newest tech. It’s about shipping products that work, scale efficiently, and don’t bankrupt you in the process.

TOON helps you do that.

Try it. Measure it. Ship it.

Your API bill will thank you. And if you save enough money, maybe you can finally convince your finance team to approve that team offsite.

Worth a shot, right?

Want more brutally honest takes on AI infrastructure and product development? That’s what we do here at DevOps for PM. Subscribe for weekly deep-dives on the tools and techniques that actually matter.

Questions? Thoughts? Hit me up—I actually read the comments.

Now go save some money. 🚀